Tuesday, 28 July 2009

Sunday, 26 July 2009

SQL 2008 : Index Compression Cheat

Index compression is similar to table compression in terms of how you enable it, i.e. -

NB : I have done NO performance testing on the effects of compressing indexes.

This post is for information only, I have still to evaluate whether i'm compresssing indexes myself!

>>>>

Want to implement index compression on all Indexes in a sql 2008 db?

Here's dynamic sql to build those commands...

page compression...

row compression...

and to remove it again...

ALTER INDEX ALL ON schema.table REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);

NB : I have done NO performance testing on the effects of compressing indexes.

This post is for information only, I have still to evaluate whether i'm compresssing indexes myself!

>>>>

Want to implement index compression on all Indexes in a sql 2008 db?

Here's dynamic sql to build those commands...

page compression...

SELECT 'ALTER INDEX ALL ON [' + SCHEMA_NAME(schema_id) + '].[' + NAME + '] REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE);' FROM sys.objects where TYPE = 'u'

row compression...

SELECT 'ALTER INDEX ALL ON [' + SCHEMA_NAME(schema_id) + '].[' + NAME + '] REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = ROW);' FROM sys.objects where TYPE = 'u'

and to remove it again...

SELECT 'ALTER INDEX ALL ON [' + SCHEMA_NAME(schema_id) + '].[' + NAME + '] REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = NONE);' FROM sys.objects where TYPE = 'u'

Thursday, 23 July 2009

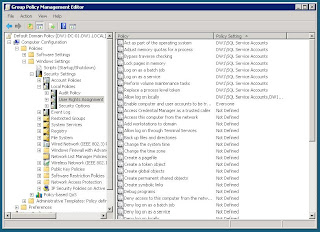

Multiple SQL Instances on Different IPs

The first thing I thought of to get additional SQL installations on the same server was to use different port numbers.

That would have been far too easy. Our application doesn't support that anyway.

Right, so the alternative approach is to leave the port the same (1433) and use a second IP address.

1) First of all, add your additional IP addresses on the host machine >

2) Configure SQL Server to use the new IP >

SQL Server Configuration Manager is the tool to use.

By using the 2008 version I can see the services & protocols for both the 2005 & 2008 instances on my machine.

By default SQL is listening on all ports and dynamically determining which to use.

We need to stop this behavior i.e restrict a SQL installation to a single port on a single port so that instances can co-exist.

Under each instance open the properties of TCP/IP

That would have been far too easy. Our application doesn't support that anyway.

Right, so the alternative approach is to leave the port the same (1433) and use a second IP address.

1) First of all, add your additional IP addresses on the host machine >

2) Configure SQL Server to use the new IP >

SQL Server Configuration Manager is the tool to use.

By using the 2008 version I can see the services & protocols for both the 2005 & 2008 instances on my machine.

By default SQL is listening on all ports and dynamically determining which to use.

We need to stop this behavior i.e restrict a SQL installation to a single port on a single port so that instances can co-exist.

Under each instance open the properties of TCP/IP

- Set "Listen All" = No.

- Set the desired IP and port

- Disable listening on IPs other than your chosen main one.

- Disable dynamic ports by removing 'o' from TCP Dynamic ports for all IP's (except for 127.0.0.1 and "IPAll")

- Restart SQL services.

Wednesday, 22 July 2009

Unique Constraint Failure Message

I hadn't noticed this before, the error message tells as where the rogue data is...

Msg 1505, Level 16, State 1, Line 3

The CREATE UNIQUE INDEX statement terminated because a duplicate key was found for the object name 'dbo.TrackingData' and the index name 'UniqueTrigger'. The duplicate key value is (2008-06-09 07:05:19, 350824225).

Msg 1750, Level 16, State 0, Line 3

Could not create constraint. See previous errors.

The statement has been terminated.

http://www.sql-server-performance.com/faq/create_unique_index_terminated_p1.aspx

Msg 1505, Level 16, State 1, Line 3

The CREATE UNIQUE INDEX statement terminated because a duplicate key was found for the object name 'dbo.TrackingData' and the index name 'UniqueTrigger'. The duplicate key value is (2008-06-09 07:05:19, 350824225).

Msg 1750, Level 16, State 0, Line 3

Could not create constraint. See previous errors.

The statement has been terminated.

http://www.sql-server-performance.com/faq/create_unique_index_terminated_p1.aspx

Monday, 20 July 2009

SQL 2008 : Intellisense (enabling & refreshing)

Enabling intellisense...

And once you have Intellisense, remember...

(that's the command to refresh the intellisense cache with new tables. objects etc)

links :

http://sqlblog.com/blogs/aaron_bertrand/archive/2009/07/01/why-is-intellisense-not-working.aspx

http://blogs.msdn.com/dtjones/archive/2008/09/11/refreshing-the-intellisense-cache.aspx

And once you have Intellisense, remember...

CTRL - SHIFT - R !!!

(that's the command to refresh the intellisense cache with new tables. objects etc)

links :

http://sqlblog.com/blogs/aaron_bertrand/archive/2009/07/01/why-is-intellisense-not-working.aspx

http://blogs.msdn.com/dtjones/archive/2008/09/11/refreshing-the-intellisense-cache.aspx

SQL 2008 : Backup Compression Optimizations

Inspired by this SQL Cat article I decided to see how quickly I could potentially perform backups.

I'm using the NUL output so backups are not written to disk.

1st test : Default Compression settings >

Processed 1222736 pages for database 'MyDatabase ', file 'MyDatabase ' on file 1.

Processed 506 pages for database 'MyDatabase ', file 'MyDatabase_log2' on file 1.

BACKUP DATABASE successfully processed 1223242 pages in 160.234 seconds (59.641 MB/sec).

2nd test : Up Buffercount to 50 >

Processed 1222768 pages for database 'MyDatabase ', file 'MyDatabase ' on file 1.

Processed 259 pages for database 'MyDatabase ', file 'MyDatabase_log2' on file 1.

BACKUP DATABASE successfully processed 1223027 pages in 107.928 seconds (88.530 MB/sec).

3rd test : Up Buffercount to 150

Processed 1222800 pages for database 'MyDatabase ', file 'MyDatabase ' on file 1.

Processed 167 pages for database 'MyDatabase ', file 'MyDatabase_log2' on file 1.

BACKUP DATABASE successfully processed 1222967 pages in 98.587 seconds (96.913 MB/sec).

4th test : Up Buffercount to 250 >

Processed 1222832 pages for database 'MyDatabase ', file 'MyDatabase ' on file 1.

Processed 263 pages for database 'MyDatabase ', file 'MyDatabase_log2' on file 1.

BACKUP DATABASE successfully processed 1223095 pages in 96.788 seconds (98.725 MB/sec).

Miniscule Improvement (2s) on my system between BufferCount of 150 and 250

Links :

http://sqlcat.com/technicalnotes/archive/2008/04/21/tuning-the-performance-of-backup-compression-in-sql-server-2008.aspx

http://blogs.msdn.com/sqlcat/archive/2008/03/02/backup-more-than-1gb-per-second-using-sql2008-backup-compression.aspx

I'm using the NUL output so backups are not written to disk.

1st test : Default Compression settings >

BACKUP DATABASE MyDatabase TO DISK = 'NUL' WITH COMPRESSION

Processed 1222736 pages for database 'MyDatabase ', file 'MyDatabase ' on file 1.

Processed 506 pages for database 'MyDatabase ', file 'MyDatabase_log2' on file 1.

BACKUP DATABASE successfully processed 1223242 pages in 160.234 seconds (59.641 MB/sec).

2nd test : Up Buffercount to 50 >

BACKUP DATABASE MyDatabase TO DISK = 'NUL' WITH COMPRESSION, BUFFERCOUNT = 50

Processed 1222768 pages for database 'MyDatabase ', file 'MyDatabase ' on file 1.

Processed 259 pages for database 'MyDatabase ', file 'MyDatabase_log2' on file 1.

BACKUP DATABASE successfully processed 1223027 pages in 107.928 seconds (88.530 MB/sec).

3rd test : Up Buffercount to 150

BACKUP DATABASE MyDatabase TO DISK = 'NUL' WITH COMPRESSION, BUFFERCOUNT = 150

Processed 1222800 pages for database 'MyDatabase ', file 'MyDatabase ' on file 1.

Processed 167 pages for database 'MyDatabase ', file 'MyDatabase_log2' on file 1.

BACKUP DATABASE successfully processed 1222967 pages in 98.587 seconds (96.913 MB/sec).

4th test : Up Buffercount to 250 >

BACKUP DATABASE MyDatabase TO DISK = 'NUL' WITH COMPRESSION, BUFFERCOUNT = 250

Processed 1222832 pages for database 'MyDatabase ', file 'MyDatabase ' on file 1.

Processed 263 pages for database 'MyDatabase ', file 'MyDatabase_log2' on file 1.

BACKUP DATABASE successfully processed 1223095 pages in 96.788 seconds (98.725 MB/sec).

Miniscule Improvement (2s) on my system between BufferCount of 150 and 250

Links :

http://sqlcat.com/technicalnotes/archive/2008/04/21/tuning-the-performance-of-backup-compression-in-sql-server-2008.aspx

http://blogs.msdn.com/sqlcat/archive/2008/03/02/backup-more-than-1gb-per-second-using-sql2008-backup-compression.aspx

Saturday, 18 July 2009

TSQL : Remove a user from all databases

Drop User from all databases on a server -

Drop the login -

EXEC sp_Msforeachdb "USE [?]; IF EXISTS (SELECT * FROM sys.database_principals WHERE name = N'USERNAME') DROP USER [USERNAME]" GO

Drop the login -

IF EXISTS (SELECT * FROM sys.server_principals WHERE name = N'USERNAME') DROP LOGIN [USERNAME] GO

Friday, 17 July 2009

Changing table schema

Sensible post today. Changing tables to a new schema >

To do for a given table -

For all tables -

To do for a given table -

ALTER SCHEMA [NewSchema] TRANSFER [tablename]

For all tables -

sp_MSforeachtable "PRINT '? modify'; ALTER SCHEMA [NewSchema] TRANSFER ?; IF @@ERROR = 0 PRINT '? modified'; PRINT ''"

Wednesday, 15 July 2009

SUBST for SSIS (eliminating location problems when deploying packages)

I'm setting up new SSIS instances and am keen to ensure that no files stray to the system drive.

On the new servers we have the following structure for SSIS packages on the D: drive >

D:\SSISDeployment

D:\SSISDeployment\Config

D:\SSISDev

Developing on my local pc I do not have a D: drive, hence I can mock one up using the old DOS subst command (yes, it still works!)

In my case SUBST D: C:\!rd\dev (I saved this in a batch file too).

Then I created my folder structure on my new D: drive.

This way there will be no problems moving packages and configuration to the servers.

I had to move my DVD-ROM drive from 'D:' to ‘ Z:’ to allow this.

Link : http://www.sqlservercentral.com/articles/Integration+Services/61619/

On the new servers we have the following structure for SSIS packages on the D: drive >

D:\SSISDeployment

D:\SSISDeployment\Config

D:\SSISDev

Developing on my local pc I do not have a D: drive, hence I can mock one up using the old DOS subst command (yes, it still works!)

In my case SUBST D: C:\!rd\dev (I saved this in a batch file too).

Then I created my folder structure on my new D: drive.

This way there will be no problems moving packages and configuration to the servers.

I had to move my DVD-ROM drive from 'D:' to ‘ Z:’ to allow this.

Link : http://www.sqlservercentral.com/articles/Integration+Services/61619/

Tuesday, 14 July 2009

Affinity & Affinity I/O - Some notes

Processor Affinity settings bind SQL Server activity to specific processors

If you are unlucky enough to be sharing a server with another application, this would be where to prevent SQL using all CPUs.

By default, the affinity settings are 'automatic' i.e. use all processors.

Affinity mask (Processor Affinity) - Controls processors as this can be degrative to performance.

Affinity I/O mask (I/O Affinity) - controls server I/O (you nominate processors to be used for i/o activity)

Never mark the same processors for affinity mask and affinity i/o mask. (see Technet link for details)

Technet : Affinity Mask Option

John Daskalakis : SQL Server 2008 and Processors

If you are unlucky enough to be sharing a server with another application, this would be where to prevent SQL using all CPUs.

By default, the affinity settings are 'automatic' i.e. use all processors.

Affinity mask (Processor Affinity) - Controls processors as this can be degrative to performance.

Affinity I/O mask (I/O Affinity) - controls server I/O (you nominate processors to be used for i/o activity)

Never mark the same processors for affinity mask and affinity i/o mask. (see Technet link for details)

Update 10/10/2010 :

Because changing I/O Affinity requires a restart of the SQL Server Service, The radio buttons 'Configured values' and 'Running values' at the bottom of the Affinity screen will show differences in configuration until the restart occurs.

Because changing I/O Affinity requires a restart of the SQL Server Service, The radio buttons 'Configured values' and 'Running values' at the bottom of the Affinity screen will show differences in configuration until the restart occurs.

Technet : Affinity Mask Option

John Daskalakis : SQL Server 2008 and Processors

Brad's sure Guide to Indexes

Brad's sure Guide to Indexes

Found this today, but cant find it anywhere else.

Maybe it's been withdrawn / combined into a newer publication.

https://forum.eviloctal.com/redirect.php?tid=37778&goto=lastpost

(not sure if they are allowed to host this here)

Found this today, but cant find it anywhere else.

Maybe it's been withdrawn / combined into a newer publication.

https://forum.eviloctal.com/redirect.php?tid=37778&goto=lastpost

(not sure if they are allowed to host this here)

Monday, 13 July 2009

Hyper-V : Time Syncronisation Settings

Hyper-V : Time Syncronisation Settings

How to disable your VM from syncing it's clock with the physical host...

Link : http://www.brentpabst.com/bp/2009/01/2 /TimeProblemWithDomainControllerInHyperV.aspx

How to disable your VM from syncing it's clock with the physical host...

Link : http://www.brentpabst.com/bp/2009/01/2 /TimeProblemWithDomainControllerInHyperV.aspx

Friday, 10 July 2009

SSRS - Reporting Services Formulae

For Reporting Services Parameters -

DateSerial - returns date format

=DateSerial(Year(Today()), Month(Today()), Day(Today()))

Useful link :

http://www.sqlteam.com/forums/topic.asp?TOPIC_ID=99696

Setting Default Date in SQL Reportng Services

http://weblogs.sqlteam.com/jhermiz/archive/2007/08/15/60289.aspx

DateSerial - returns date format

=DateSerial(Year(Today()), Month(Today()), Day(Today()))

Useful link :

http://www.sqlteam.com/forums/topic.asp?TOPIC_ID=99696

Setting Default Date in SQL Reportng Services

http://weblogs.sqlteam.com/jhermiz/archive/2007/08/15/60289.aspx

Thursday, 9 July 2009

VISIO 2007 add-in for SQL Server Infrastructure Diagrams

The VISIO 2007 add-in for SQL Server Infrastructure Diagrams is reviewed by James Rowland Jones here >

http://blogs.conchango.com/jamesrowlandjones/archive/2008/07/09/visio-2007-add-in-for-sql-server-infrastructure-diagrams.aspx

Very useful if you need to document & share sql config (which of course you do!)

http://blogs.conchango.com/jamesrowlandjones/archive/2008/07/09/visio-2007-add-in-for-sql-server-infrastructure-diagrams.aspx

Very useful if you need to document & share sql config (which of course you do!)

Wednesday, 8 July 2009

Data in the Buffer Cache

I found this script here > http://itknowledgeexchange.techtarget.com/sql-server/tag/syspartitions/

(Thank you Denny Cherry)

In my case, the script shows very low percentages, as our data moves very fast, expiring the cache all the time...

(Thank you Denny Cherry)

In my case, the script shows very low percentages, as our data moves very fast, expiring the cache all the time...

SELECT sys.tables.name TableName, sum(a.page_id)*8 AS MemorySpaceKB, SUM(sys.allocation_units.data_pages)*8 AS StorageSpaceKB, CASE WHEN SUM(sys.allocation_units.data_pages) <> 0 THEN SUM(a.page_id)/CAST(SUM(sys.allocation_units.data_pages) AS NUMERIC(18,2)) END AS ‘Percentage Of Object In Memory’ FROM (SELECT database_id, allocation_unit_id, COUNT(page_id) page_id FROM sys.dm_os_buffer_descriptors GROUP BY database_id, allocation_unit_id) a JOIN sys.allocation_units ON a.allocation_unit_id = sys.allocation_units.allocation_unit_id JOIN sys.partitions ON (sys.allocation_units.type IN (1,3) AND sys.allocation_units.container_id = sys.partitions.hobt_id) OR (sys.allocation_units.type = 2 AND sys.allocation_units.container_id = sys.partitions.partition_id) JOIN sys.tables ON sys.partitions.object_id = sys.tables.object_id AND sys.tables.is_ms_shipped = 0 WHERE a.database_id = DB_ID() GROUP BY sys.tables.name

Generating a list of sequential numbers in TSQL

Generating a list of sequential numbers.

A really interesting post, comparing techniques...

http://stackoverflow.com/questions/1393951/what-is-the-best-way-to-create-and-populate-a-numbers-table

The fastest (method 7) is reproduced below ;

A really interesting post, comparing techniques...

http://stackoverflow.com/questions/1393951/what-is-the-best-way-to-create-and-populate-a-numbers-table

The fastest (method 7) is reproduced below ;

DROP TABLE NumbersTest

DECLARE @RunDate datetime

SET @RunDate=GETDATE()

SELECT TOP 10000 IDENTITY(int,1,1) AS Number

INTO NumbersTest

FROM sys.objects s1

CROSS JOIN sys.objects s2

ALTER TABLE NumbersTest ADD CONSTRAINT PK_NumbersTest PRIMARY KEY CLUSTERED (Number)

PRINT CONVERT(varchar(20),datediff(ms,@RunDate,GETDATE()))+' milliseconds'

SELECT COUNT(*) FROM NumbersTest

Tuesday, 7 July 2009

TSQL : Tables, Columns and Datatypes

I wanted to locate tables with datetime columns to evaluate my partitioning approach. This this the code I used.

Tables, Columns and Datatypes

Tables, Columns and Datatypes

SELECT sys_schemas.Name as [schema_name] , sys_tables.Name AS [table_name] , sys_columns.Name AS [column_name] , sys_types.Name AS [datatype_name] FROM Sys.Tables sys_tables JOIN Sys.Schemas sys_schemas ON sys_schemas.Schema_Id = sys_tables.Schema_Id JOIN Sys.Columns sys_columns ON sys_columns.Object_Id = sys_tables.Object_Id JOIN Sys.Types sys_types ON sys_types.System_Type_Id = sys_columns.System_Type_Id WHERE sys_types.Name = 'datetime' ORDER BY sys_schemas.Name, sys_tables.Name, sys_columns.Name, sys_types.Name

Friday, 3 July 2009

Internal product development names for SQL Server

Internal product development names for SQL Server >

6.5 Hydra

7.0 Sphinx

2000 Shiloh

2005 Yukon

2008 Katmai

2008R2 Kilimanjaro

2012 Denali

These and those for other Microsoft products, here.

6.5 Hydra

7.0 Sphinx

2000 Shiloh

2005 Yukon

2008 Katmai

2008R2 Kilimanjaro

2012 Denali

These and those for other Microsoft products, here.

Thursday, 2 July 2009

SQL 2008 Table Compression - Real World Tryout

Taking an existing import system, I ran the same test 3 times against an empty databases.

The database was presized to 25MB and shrunk afer each test so that data growth operations would not effect timings.

The test was simply running some existing code for 1 iteration.

It inserted 76015 rows across 15 separate tables.

Uncompressed

This is purely to demonstrate the effects of compression.

The figures are only representative of the test system.

Further efficiency gains can be made with the import

The database was presized to 25MB and shrunk afer each test so that data growth operations would not effect timings.

The test was simply running some existing code for 1 iteration.

It inserted 76015 rows across 15 separate tables.

Uncompressed

- Data Size : 14656 KB

- Duration : 79s (962 rows/second)

- Data Size : 12224 KB (83.4%, saving of 16.6%)

- Duration : 82s (increase of 4%, 927 rows/second)

- Data Size : 8512 KB (58.1%, saving of 41.9%)

- Duration : 87s (increase of 10%, 873 rows/second)

This is purely to demonstrate the effects of compression.

The figures are only representative of the test system.

Further efficiency gains can be made with the import

- simplifying it

- making import processes run in parallel.

- placing it on a faster disk subsystem

TSQL : Remote Debugging

I accidently hit debug rather than Execute in management studio today.

The result was this -

Being on a corporate network I cannot get the ports open or permissions on the development box to sort this, but here are the links anyway ...

Links :

How to Set Up Remote Debugging

Remote Debugging Across Domains

The result was this -

Being on a corporate network I cannot get the ports open or permissions on the development box to sort this, but here are the links anyway ...

Links :

How to Set Up Remote Debugging

Remote Debugging Across Domains

Wednesday, 1 July 2009

TSQL : Index Size Script

returns types and sizes of indexes...

SELECT sys_schemas.name AS SchemaName

,sys_objects.name AS TableName

,sys_indexes.name AS IndexName

,sys_indexes.type_desc AS IndexType

,partition_stats.used_page_count * 8 AS IndexSizeKB

,CAST(partition_stats.used_page_count * 8 / 1024.00 AS Decimal(10,3))AS IndexSizeMB

,CAST(partition_stats.used_page_count * 8 / 1048576.00 AS Decimal(10,3)) AS IndexSizeGB

FROM sys.dm_db_partition_stats partition_stats

INNER JOIN sys.indexes sys_indexes

ON partition_stats.[object_id] = sys_indexes.[object_id]

AND partition_stats.index_id = sys_indexes.index_id

AND sys_indexes.type_desc <> 'HEAP'

INNER JOIN sys.objects sys_objects

ON sys_objects.[object_id] = partition_stats.[object_id]

INNER JOIN sys.schemas sys_schemas

ON sys_objects.[schema_id] = sys_schemas.[schema_id]

AND sys_schemas.name <> 'SYS'

--WHERE partition_stats.[object_id] = object_id('dbo.TableName')

ORDER BY 1,2,3,4

Subscribe to:

Comments (Atom)